Systematic Review Protocol

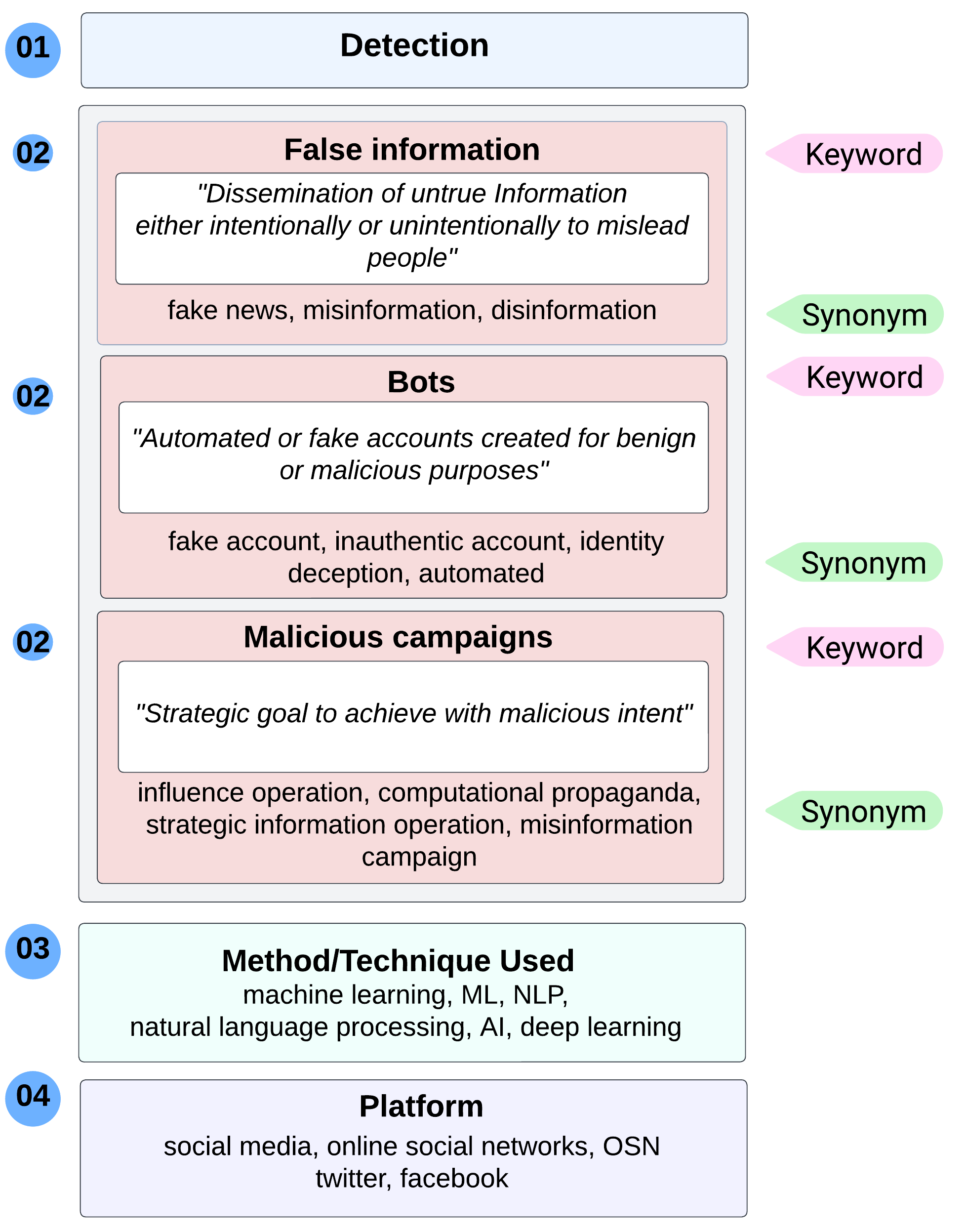

This study covers three types of SMM: false information, bots, and malicious campaigns. As mentioned in Section 2, we searched for and selected papers following a systematic literature review (SLR) process. We formulate separate search terms for each of the three SMM types and use them to query databases such as Scopus, IEEE Digital Library, ACM Library, and Google Scholar. Each query combines keywords along four dimensions: purpose, type of SMM, method, and platform.
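The four-dimension keyword combination can be sketched as a Boolean query builder: synonyms within one dimension are joined with OR, and the dimensions are joined with AND. This is a minimal sketch; the keyword lists in `QUERY_DIMENSIONS` are hypothetical placeholders rather than the protocol's actual search terms, and `build_query` is an illustrative helper, not part of any database API.

```python
# Hypothetical keyword lists: the review's actual search terms are not reproduced here.
QUERY_DIMENSIONS = {
    "purpose": ["detection", "identification"],
    "smm_type": ["fake news", "rumor", "misinformation"],
    "method": ["machine learning", "deep learning"],
    "platform": ["Twitter", "Facebook"],
}


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are matched as exact phrases."""
    return f'"{term}"' if " " in term else term


def build_query(dimensions: dict) -> str:
    """OR the synonyms inside each dimension, then AND the dimensions together."""
    groups = []
    for terms in dimensions.values():
        groups.append("(" + " OR ".join(quote(t) for t in terms) + ")")
    return " AND ".join(groups)


print(build_query(QUERY_DIMENSIONS))
```

Each database has its own syntax for restricting fields (Scopus, for instance, uses field codes such as `TITLE-ABS-KEY(...)`), so the generated string would need adapting for each source.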

First, we query articles related to false information, using search terms with keywords such as

Finally, we combine all three forms and retrieve the final set of papers.

Since SMM is a recent threat in the context of OSNs, we restrict the search to papers published between 2015 and 2022, covering both recent and current work on SMM. In a few places, we cite papers published before 2015 to support definitions and provide the necessary background. We first exclude papers that do not pertain to the chosen SMM types or that are not written in English. Next, we read the title and abstract of each remaining paper to select the most relevant works.
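The automatic part of this first-level screen (publication window, language, and topic) can be expressed as a simple predicate over bibliographic records; the title-and-abstract reading that follows remains a manual step. A minimal sketch, assuming a hypothetical `Record` type whose `topic` label has already been assigned; the toy records are illustrative and not drawn from the review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """A hypothetical bibliographic record returned by a database query."""
    title: str
    year: int
    language: str
    topic: str  # hypothetical pre-assigned label, e.g. "bots"


# The three SMM types covered by the review.
SMM_TOPICS = {"false information", "bots", "malicious campaigns"}


def passes_first_screen(rec: Record, start: int = 2015, end: int = 2022) -> bool:
    """Keep English-language records on an SMM topic published within [start, end]."""
    return (
        start <= rec.year <= end
        and rec.language.lower() == "english"
        and rec.topic in SMM_TOPICS
    )


records = [
    Record("A", 2018, "English", "bots"),
    Record("B", 2013, "English", "bots"),               # outside the time window
    Record("C", 2020, "German", "false information"),   # not written in English
    Record("D", 2021, "English", "image forensics"),    # not an SMM topic
]
kept = [r.title for r in records if passes_first_screen(r)]
print(kept)  # ['A']
```

Pre-2015 background papers are cited separately and never enter this screened set, which is why the window is applied as a hard filter here.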

Next, we carry out a second-level reading based on a set of criteria. For false information, these include the category of false information (fake news, rumor, hoax, propaganda, phishing), since all of these are commonly used terms for false information. Other criteria include the OSN platform (e.g., Twitter and Facebook), the method or technique used, and the most prevalent features in the detection process. For bot detection articles, the analysis criteria consist of the bot type (spambot, social bot, follower bot, scam bot) together with the criteria above. Likewise, we analyze malicious campaign detection papers against the defined criteria. This process yields 34 papers on false information detection, 35 on bot detection, 28 on malicious campaign detection, and 3 covering all three forms combined, for a total of 100 papers.
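The second-level criteria amount to a data-extraction sheet with one row per paper. The sketch below assumes a hypothetical `CodedPaper` record that checks each subtype against the categories listed above (no subtypes are listed for malicious campaigns, so none are enforced there) and a hypothetical `papers_per_form` helper that counts papers per SMM form; the example rows are illustrative only.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional

# Subtype categories listed in this section; none are listed for malicious campaigns.
SUBTYPES = {
    "false information": {"fake news", "rumor", "hoax", "propaganda", "phishing"},
    "bot": {"spambot", "social bot", "follower bot", "scam bot"},
}
FORMS = {"false information", "bot", "malicious campaign", "combined"}


@dataclass
class CodedPaper:
    """One row of a hypothetical second-level data-extraction sheet."""
    paper_id: str
    form: str
    platform: str                   # OSN platform, e.g. "Twitter", "Facebook"
    method: str                     # detection method or technique
    subtype: Optional[str] = None   # required only for forms that define subtypes
    features: list = field(default_factory=list)  # most prevalent detection features

    def __post_init__(self) -> None:
        if self.form not in FORMS:
            raise ValueError(f"unknown SMM form: {self.form!r}")
        allowed = SUBTYPES.get(self.form)
        if allowed is not None and self.subtype not in allowed:
            raise ValueError(f"{self.subtype!r} is not a listed {self.form} subtype")


def papers_per_form(papers) -> Counter:
    """Count coded papers by SMM form."""
    return Counter(p.form for p in papers)


sheet = [
    CodedPaper("p1", "false information", "Twitter", "LSTM", subtype="rumor"),
    CodedPaper("p2", "bot", "Twitter", "random forest", subtype="social bot"),
    CodedPaper("p3", "malicious campaign", "Facebook", "graph clustering"),
]
print(papers_per_form(sheet))
```

Applied to the full extraction sheet, the same count would be expected to reproduce the reported breakdown of 34, 35, 28, and 3 papers.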